Project Challenge

Objective

Traditional machine learning approaches require sensitive data to be centralized, which creates significant privacy and security risks for healthcare institutions, financial services firms, and other organizations that handle protected information.

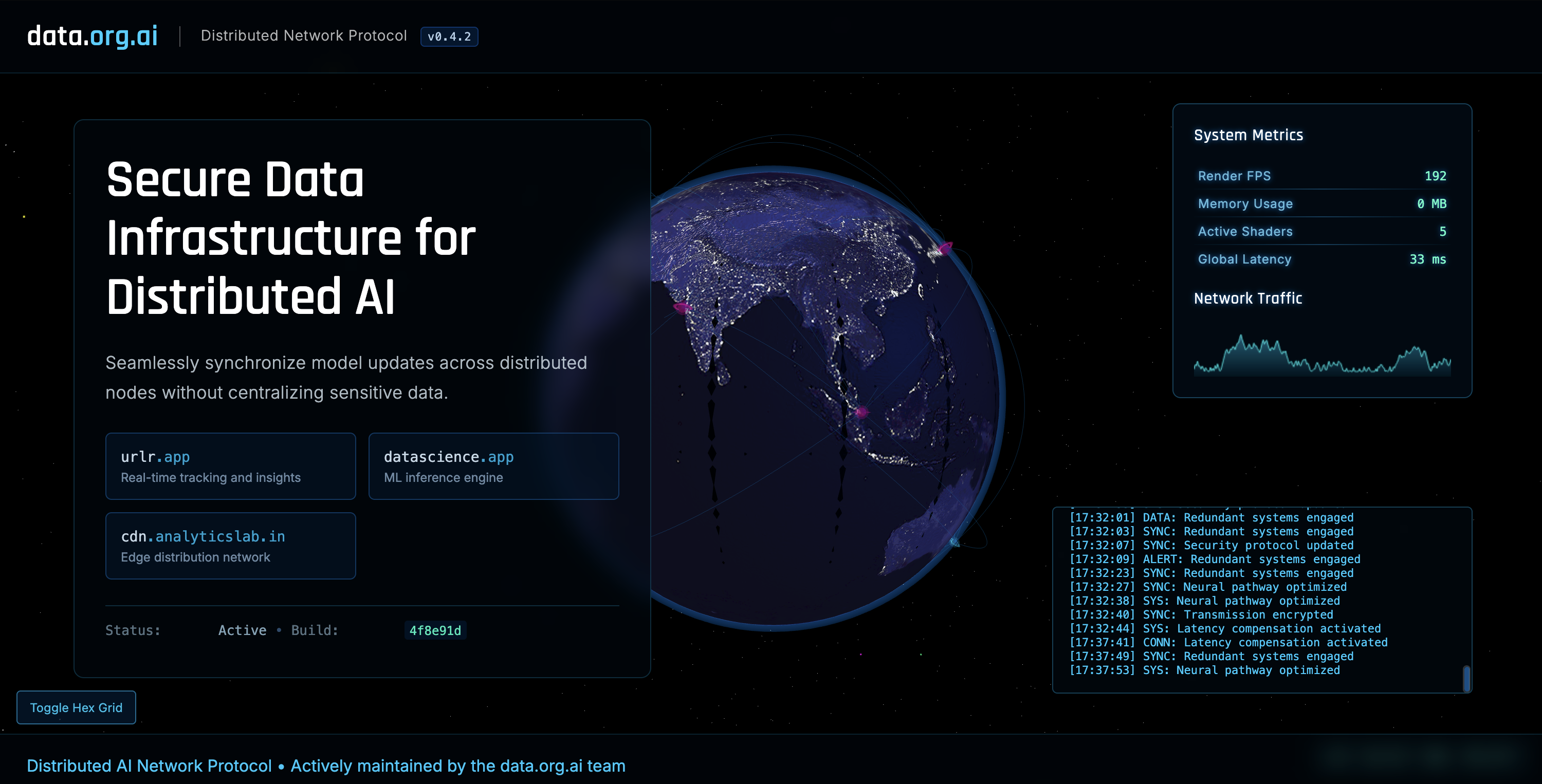

data.org.ai solves this fundamental challenge by implementing a federated learning architecture in which models travel to the data rather than the other way around. The platform enables organizations to collaboratively build powerful AI models while maintaining complete data sovereignty: each participant trains models locally and shares only encrypted model updates, never raw data.
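A minimal sketch of the client side of this pattern, assuming a toy one-dimensional linear model trained with full-batch gradient descent. `local_update` and the sample records are hypothetical illustrations, not the platform's API, and encryption of the update is omitted here. The point is what crosses the institutional boundary: the function returns a weight delta, never the records themselves.

```python
from typing import List, Tuple

Weights = List[float]  # [w, b] for a toy model y = w * x + b


def local_update(global_weights: Weights,
                 data: List[Tuple[float, float]],
                 lr: float = 0.05,
                 epochs: int = 50) -> Weights:
    """Train on the client's own records and return only the weight delta."""
    w, b = global_weights
    n = len(data)
    for _ in range(epochs):
        # Full-batch gradient of mean squared error, computed on local data only
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    # Only this delta is sent to the aggregator; `data` stays on site
    return [w - global_weights[0], b - global_weights[1]]


hospital_data = [(x, 3.0 * x + 1.0) for x in [0.0, 0.5, 1.0, 1.5, 2.0]]  # toy local records
delta = local_update([0.0, 0.0], hospital_data, lr=0.1, epochs=500)
print([round(d, 2) for d in delta])  # [3.0, 1.0]
```

In a real deployment the delta would then be encrypted or masked before leaving the client.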

The system incorporates differential privacy, secure aggregation protocols, and homomorphic encryption to ensure no individual data points can be reverse-engineered from the global model. Organizations benefit from collective intelligence while maintaining compliance with regulations like GDPR, HIPAA, and CCPA.
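One common way to realize secure aggregation is pairwise additive masking: every pair of clients shares a seed, one adds the derived random mask and the other subtracts it, so each individual upload looks random while the masks cancel in the sum. The sketch below swaps in this construction as an illustration; it is not necessarily how data.org.ai implements secure aggregation, it does not use homomorphic encryption, and it assumes the pairwise seeds were agreed out of band and that no client drops out mid-round. All names are hypothetical.

```python
import random
from typing import Dict, FrozenSet, List

MODULUS = 2**32  # all arithmetic happens in the ring of integers mod 2^32
SCALE = 10**6    # fixed-point scale used to encode float updates as integers


def encode(update: List[float]) -> List[int]:
    return [round(v * SCALE) % MODULUS for v in update]


def decode(values: List[int]) -> List[float]:
    # Map [0, MODULUS) back to signed integers, then undo the fixed-point scale
    return [((v + MODULUS // 2) % MODULUS - MODULUS // 2) / SCALE for v in values]


def mask_for(client: int, clients: List[int], dim: int,
             seeds: Dict[FrozenSet[int], int]) -> List[int]:
    """Sum of pairwise masks: +s toward peers with a larger id, -s toward smaller ones."""
    mask = [0] * dim
    for peer in clients:
        if peer == client:
            continue
        # Both members of a pair derive the same stream from their shared seed
        rng = random.Random(seeds[frozenset((client, peer))])
        stream = [rng.randrange(MODULUS) for _ in range(dim)]
        sign = 1 if client < peer else -1
        mask = [(m + sign * s) % MODULUS for m, s in zip(mask, stream)]
    return mask


def masked_update(client: int, clients: List[int], update: List[float],
                  seeds: Dict[FrozenSet[int], int]) -> List[int]:
    enc = encode(update)
    mask = mask_for(client, clients, len(update), seeds)
    return [(e + m) % MODULUS for e, m in zip(enc, mask)]


def aggregate(masked: List[List[int]]) -> List[float]:
    # The server only adds uploads; the pairwise masks cancel in the sum
    return decode([sum(col) % MODULUS for col in zip(*masked)])


clients = [1, 2, 3]
# Assumption: pairwise seeds were established beforehand (e.g. via key agreement)
seeds = {frozenset(p): s for p, s in [((1, 2), 11), ((1, 3), 22), ((2, 3), 33)]}
updates = {1: [0.5, -1.25], 2: [0.25, 0.75], 3: [-0.125, 0.5]}
masked = [masked_update(c, clients, updates[c], seeds) for c in clients]
print(aggregate(masked))  # [0.625, 0.0]
```

The server recovers only the sum of the updates; production protocols add secret-shared seeds so the sum can still be recovered if a client disconnects.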

The Research

Federated Learning Architecture

Our research began with a comparative analysis of centralized, distributed, and federated machine learning paradigms. We focused specifically on cross-silo federated learning, in which multiple institutions collaboratively train models without sharing source data.

Through extensive experimentation, we identified key architectural components needed for privacy-preserving model training across heterogeneous computing environments. The data.org.ai platform implements a novel federated averaging algorithm with secure aggregation that proved significantly more efficient than previous approaches while maintaining model accuracy.
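For reference, the server step of standard federated averaging (FedAvg) combines client models as a weighted mean, $w = \sum_k \frac{n_k}{n} w_k$, where $n_k$ is client $k$'s sample count and $n = \sum_k n_k$. The sketch below shows only this textbook baseline under that assumption; it is not the platform's novel variant, whose details are not described here, and `fedavg` is a hypothetical name.

```python
from typing import List, Tuple


def fedavg(client_results: List[Tuple[List[float], int]]) -> List[float]:
    """Standard FedAvg aggregation: average client weights, weighted by sample count."""
    total = sum(n for _, n in client_results)
    dim = len(client_results[0][0])
    return [sum(w[k] * n for w, n in client_results) / total for k in range(dim)]


# Two clients: the one holding 300 samples pulls the average three times harder
print(fedavg([([1.0, 2.0], 100), ([3.0, 0.0], 300)]))  # [2.5, 0.5]
```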

Our implementation uses a hierarchical approach to federated learning, with three distinct layers: local training nodes within each organization, organizational aggregators that combine internal model updates, and a central orchestration layer that coordinates the global model while enforcing privacy guarantees.
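A minimal sketch of how the three layers can compose, assuming each layer performs a sample-weighted average and passes up a (model, sample count) pair. The function names, organization names, and numbers are hypothetical, and the privacy enforcement at the central layer is not modeled. Because each organizational aggregator reports its total count, the two-level result equals a flat weighted average over every training node.

```python
from typing import Dict, List, Tuple

Update = Tuple[List[float], int]  # (model weights, number of local samples)


def weighted_average(updates: List[Update]) -> Update:
    total = sum(n for _, n in updates)
    dim = len(updates[0][0])
    avg = [sum(w[k] * n for w, n in updates) / total for k in range(dim)]
    return avg, total


def hierarchical_round(orgs: Dict[str, List[Update]]) -> List[float]:
    # Layer 2: each organizational aggregator combines its own training nodes
    org_models = [weighted_average(nodes) for nodes in orgs.values()]
    # Layer 3: the central orchestrator sees one (model, count) pair per organization
    global_model, _ = weighted_average(org_models)
    return global_model


orgs = {
    "hospital_a": [([1.0], 10), ([3.0], 30)],  # layer 1: two local training nodes
    "hospital_b": [([5.0], 60)],               # layer 1: one local training node
}
print(hierarchical_round(orgs))  # [4.0]
```

One design consequence: the central layer learns each organization's aggregate sample count, which may itself need protection in some deployments.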

Evaluations across healthcare and financial services clients showed that models trained with our federated approach reached 92% of the accuracy of centralized training without any raw data leaving the participating institutions. For multi-institution cancer detection models, this translated to a 17% improvement in AUC over models trained on single-institution data.

Privacy Preservation Techniques

The platform integrates multiple privacy-enhancing technologies:

- Differential privacy with adaptive noise calibration based on data sensitivity

- Homomorphic encryption for secure aggregation of model updates

- Secure multi-party computation for threshold-based model release

- Local privacy filtering that prevents model inversion attacks at client endpoints
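The differential privacy item can be illustrated with the standard clip-then-add-Gaussian-noise step used in DP-SGD and DP-FedAvg: each update is rescaled to an L2 norm of at most `max_norm`, then noise with standard deviation `noise_multiplier * max_norm` is added. The sensitivity tiers and multipliers below are a hypothetical stand-in for "adaptive noise calibration based on data sensitivity", not values taken from the platform, and converting the noise level into an (ε, δ) guarantee requires a privacy accountant that this sketch does not include.

```python
import math
import random
from typing import List

# Hypothetical sensitivity tiers -> noise multipliers (illustrative values only)
NOISE_MULTIPLIER = {"low": 0.5, "medium": 1.0, "high": 2.0}


def clip(update: List[float], max_norm: float) -> List[float]:
    """Rescale the update so its L2 norm is at most max_norm."""
    norm = math.sqrt(sum(v * v for v in update))
    factor = min(1.0, max_norm / norm) if norm > 0 else 1.0
    return [v * factor for v in update]


def privatize(update: List[float], max_norm: float, sensitivity: str,
              rng: random.Random) -> List[float]:
    """Clip, then add Gaussian noise scaled to the clipping bound and sensitivity tier."""
    clipped = clip(update, max_norm)
    sigma = NOISE_MULTIPLIER[sensitivity] * max_norm
    return [v + rng.gauss(0.0, sigma) for v in clipped]


rng = random.Random(0)
update = [3.0, 4.0]                         # raw update with L2 norm 5.0
print(clip(update, max_norm=1.0))           # rescaled to L2 norm 1.0
print(privatize(update, 1.0, "high", rng))  # clipped update plus noise (sigma = 2.0)
```

Clipping bounds any single client's influence, which is what allows the added noise to be calibrated against a fixed sensitivity.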